Creativity is often considered a bastion of humanness, somewhat outside the realm of artificial intelligence. Yet AI can generate variations on artistic themes that appear to be original creations.

But I would argue that this computer-based creativity reflects the creativity of the programmers who build the algorithms that simulate this most human of traits.

OpenAI has created a program called DALL-E 2 that can create or edit images based on textual descriptions. The striking capabilities of this technology have vast implications for creativity within the field of synthetic media. Deepfakes (AI-generated images, video, and audio based on source files) can show people saying and doing things that never happened. DALL-E 2 adds a very interesting capability: it interprets textual descriptions and combines concepts with an artistic style, or adds visual elements that are consistent with the subject's style and blend in natural effects such as lighting and shadows. The results are amazing.

The key to these capabilities is having the correct training data (no surprise): images labeled with concepts that tell the algorithm, for example, the characteristics of a cat. One image was of a “cute cat” (one could argue that most cats are pretty cute, that is, if you like cats), so the program had to be trained on cute cats. The question I have is whether this training was “post-coordinated” or “pre-coordinated.” Was it trained on “cats” and separately on “cuteness” (post-coordinated, just as ecommerce sites use separate facets to filter products), or on “cute cats” (pre-coordinated, combined into a single concept)? My guess is the latter, given how subjective “cute” is.
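To make the distinction concrete, here is a minimal Python sketch of the two labeling schemes. The image names and tags are hypothetical, and the sketch says nothing about how OpenAI actually labeled its training data; it only shows how the two kinds of vocabulary behave when queried.

```python
# Hypothetical image catalog, for illustration only.

# Post-coordinated: each image carries independent facet terms,
# which are combined at query time.
post_coordinated = {
    "img_001.jpg": {"cat", "cute"},
    "img_002.jpg": {"cat", "grumpy"},
    "img_003.jpg": {"dog", "cute"},
}

# Pre-coordinated: each image carries a single combined concept,
# fixed at labeling time.
pre_coordinated = {
    "img_001.jpg": "cute cat",
    "img_002.jpg": "grumpy cat",
    "img_003.jpg": "cute dog",
}


def find_post(catalog, *terms):
    """Return images tagged with every requested facet (like stacking ecommerce filters)."""
    wanted = set(terms)
    return sorted(name for name, tags in catalog.items() if wanted <= tags)


def find_pre(catalog, concept):
    """Return images whose single combined label matches the concept exactly."""
    return sorted(name for name, label in catalog.items() if label == concept)


print(find_post(post_coordinated, "cat", "cute"))
print(find_post(post_coordinated, "cute"))
print(find_pre(pre_coordinated, "cute cat"))
print(find_pre(pre_coordinated, "cute"))
```

With post-coordination, “cute” is a reusable facet that can be paired with any subject; with pre-coordination, “cute” exists only inside combined concepts, so a query for “cute” on its own finds nothing, while each combined label can capture what “cute” means for that particular subject.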

Image credit: https://daleonai.com/dalle-5-mins

When working on a digital asset management project a couple of years back, we had to define ambiguous and subjective attributes. One image of lollipops with faces on them was interpreted as cute by some and creepy by others.

Images are notoriously difficult to describe in text. But consider the labels as handles on existing images rather than as descriptions of those images. The training data (the images representing concepts) therefore needs to be carefully chosen, because it defines the inputs to DALL-E. This is true of AI technology in general: in many cases the data matters more than the algorithm. Humans select training data, and ultimately humans have to label that data.

Applying algorithms to creative endeavors allows humans to use their judgment in selecting and evaluating AI-generated outputs. This is a great example of augmenting distinctly human abilities in ways that can improve human creativity and productivity. Like every application of AI, these tools depend on the right training data, correctly labeled.

Here's an explanation of the CLIP model that DALL-E 2 uses to connect text and images:

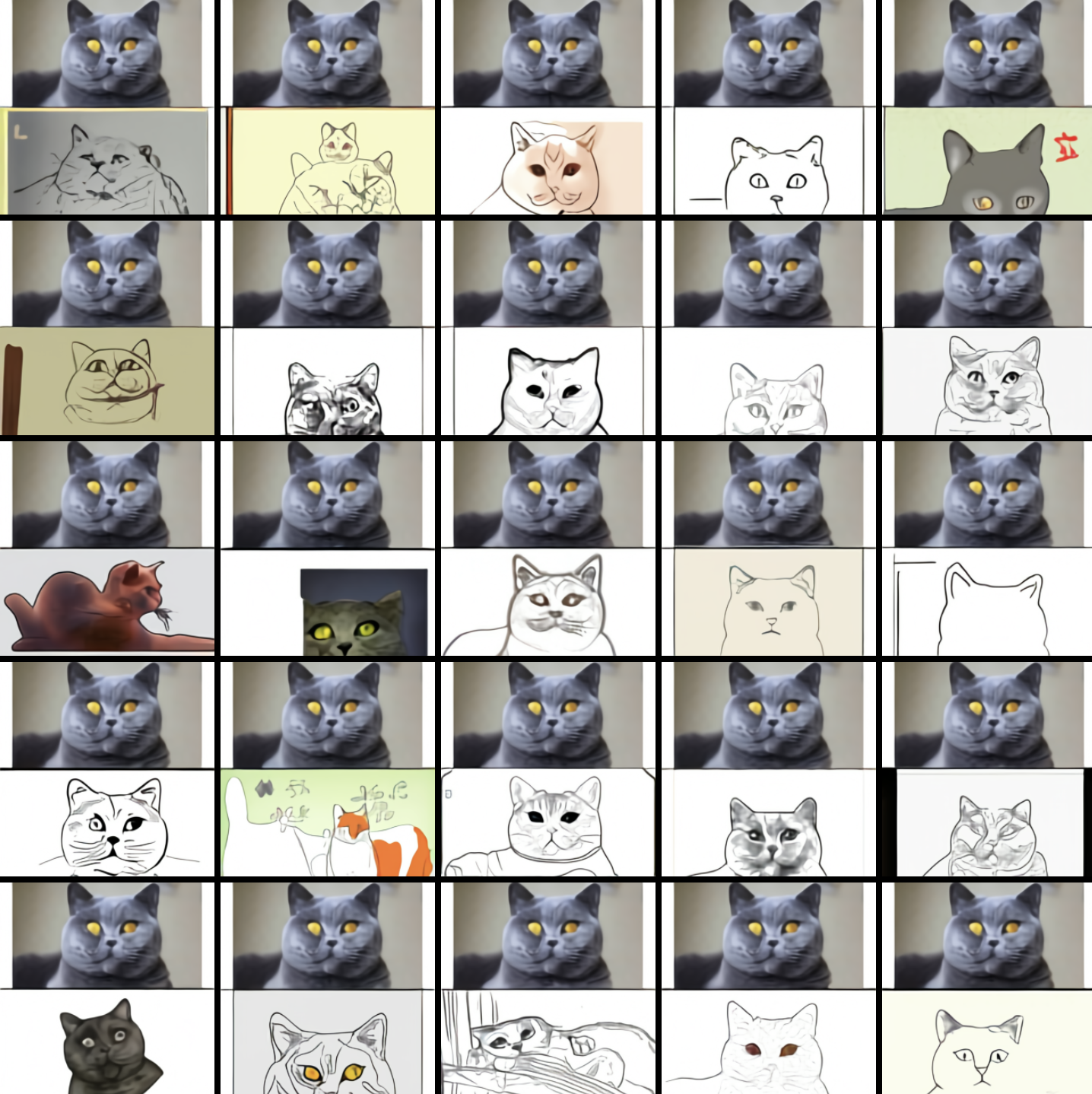
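At the heart of CLIP is a shared embedding space: an image encoder and a text encoder map pictures and captions to vectors, and matching pairs end up close together as measured by cosine similarity. The toy Python sketch below uses made-up three-dimensional vectors in place of real encoder outputs (the captions and numbers are hypothetical) and simply ranks captions against one image. It illustrates the scoring step only, not how CLIP is trained or how DALL-E 2 uses CLIP embeddings to generate images.

```python
import math

# Hypothetical vectors standing in for CLIP encoder outputs.
# Real CLIP embeddings come from trained neural networks and have hundreds of dimensions.
image_embedding = [0.9, 0.1, 0.3]

caption_embeddings = {
    "a photo of a cute cat": [0.8, 0.2, 0.4],
    "a photo of a dog": [0.1, 0.9, 0.2],
    "a bowl of fruit": [0.2, 0.1, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_captions(image_vec, captions):
    """Score every caption against the image and sort from best to worst match."""
    scores = {text: cosine_similarity(image_vec, vec) for text, vec in captions.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for caption, score in rank_captions(image_embedding, caption_embeddings):
    print(f"{score:.3f}  {caption}")
```

The caption whose vector points in nearly the same direction as the image vector ranks first; in a trained model, that alignment is what the labeled image-text pairs teach.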

A gallery of DALL-E 2-generated art:

https://www.instagram.com/twominutepapers/

Another highly geeky deep dive into OpenAI's paper on DALL-E 2: