A very common complaint from people using content management systems is “Search doesn’t work.” That is a broad statement, and it usually needs some refinement to get to the actual problem. Still, you should ask yourself whether you have done all you can to ensure that the most relevant results appear at the top of the search results list.

There is a lot you can do to improve relevancy by configuring and tuning your search engine. But another very important, and undervalued, factor in improving results is metadata. Accurately tagged metadata gives unstructured content the structure the search engine needs to find the best matches. Even if a metadata field is used only as a facet in faceted search, accurate tagging helps narrow the results to the most relevant ones.

Manual tagging can be very good if the indexer is familiar with the purpose of tagging, knows what to look for in the document, and is able to take the time needed to update all of the applicable tags. I worked for a company that had about 30 metadata fields that each content creator had to review. Some content creators wanted to make sure every metadata field was tagged with something. That was a huge chunk of time to spend on tags that did nothing to improve the search results. On the other hand, some content creators wanted to get their documents into the repository and be done with it, so they tagged only what was absolutely necessary. You can imagine the results. Content from creators who filled in every metadata field showed up in many different searches, even when it was not relevant. Content with minimal tagging did not show up in search results when it should have.

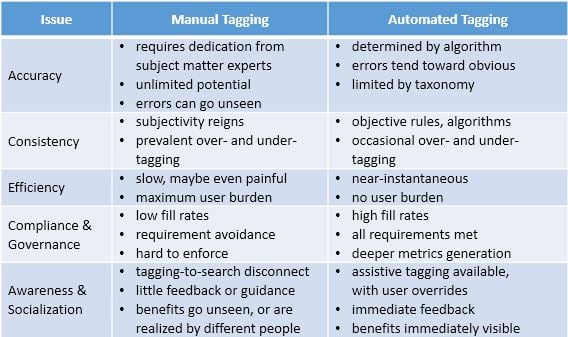

This lack of consistency is a problem that the search engine cannot fix, and it makes it appear as though “Search doesn’t work.” One benefit of auto-classification is its consistency. Auto-classification cannot tag every piece of content exactly right every time; however, the “rules” that are established for auto-classification will be applied the same way to every piece of content. The auto-classifier will not fill metadata fields with tags that don’t show up in the document, and the tags it does apply will be ones that actually appear in the document. The result should be a significant reduction in both under-tagged and over-tagged metadata fields. The chart below identifies additional differences between manual and automated classification:

The main obstacle to using auto-classification is the upfront commitment of time and money. While time and cost are of no small consequence, that commitment might actually save money and improve worker productivity in the long run. Where manual tagging has an ongoing cost in employee time, auto-classification has primarily an initial cost: purchasing the software and either “training” the system or writing the rules needed to auto-classify content. The training or the rules may need some changes over time, but these should only be refinements to the existing setup.
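To make the rule-writing option a little more concrete, here is a minimal Python sketch of what rule-based auto-classification might look like. It is an illustration only, not how any particular product works: the `RULES` table, the field names (`topic`, `doc_type`), the trigger terms, and the `auto_classify` function are all hypothetical. The point is that a tag is applied only when one of its trigger terms actually appears in the document, and the same rules produce the same tags every time.

```python
import re

# Hypothetical rules: for each metadata field, map a tag to the terms
# that trigger it. A real deployment would derive these from a taxonomy.
RULES = {
    "topic": {
        "search": ["search engine", "relevancy", "faceted search"],
        "taxonomy": ["taxonomy", "controlled vocabulary"],
    },
    "doc_type": {
        "policy": ["policy", "compliance"],
        "how-to": ["step-by-step", "how to"],
    },
}


def auto_classify(text, rules=RULES):
    """Return {field: sorted list of tags} for rule terms found in the text.

    Fields with no matching terms are left out, so nothing is tagged
    just for the sake of filling in a field.
    """
    lowered = text.lower()
    result = {}
    for field, tags in rules.items():
        matched = [
            tag
            for tag, terms in tags.items()
            # Match whole words or phrases only, case-insensitively.
            if any(
                re.search(r"\b" + re.escape(term) + r"\b", lowered)
                for term in terms
            )
        ]
        if matched:
            result[field] = sorted(matched)
    return result


doc = "How to tune relevancy in your search engine using a taxonomy."
print(auto_classify(doc))
# {'topic': ['search', 'taxonomy'], 'doc_type': ['how-to']}
```

Refining the setup over time would then mean editing the terms in the rules table rather than asking every content creator to change how they tag.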
